Introduction

In this project, I aimed to develop a dynamic and versatile visualization system that responds to the time of day and to changing weather conditions. Using audio and weather data as dynamic inputs, I set out to create a cohesive visual representation that captures the shifting moods and atmospheres of a day. The system refreshes its weather data every hour, mirroring natural changes in the weather, while reacting continuously to the fundamental features of the current audio. The approach prioritizes simplicity and flexibility: a single core system generates a wide variety of visual experiences, each shaped by changing environmental and sound conditions.

Vision: This project reflects my hypothesis that simple but thoroughly examined data can open up endless creative possibilities, even for a single system. The system reacts naturally to the real world. It offers users a visual exploration that reflects the patterns of weather and sound in the same psychological way those phenomena affect us.

Data Analysis: Selecting and Refining Input Parameters

I modified the data inputs from my earlier audio and weather analysis project. The goal was to simplify the system while preserving the essential inputs needed for dynamic changes. These are:

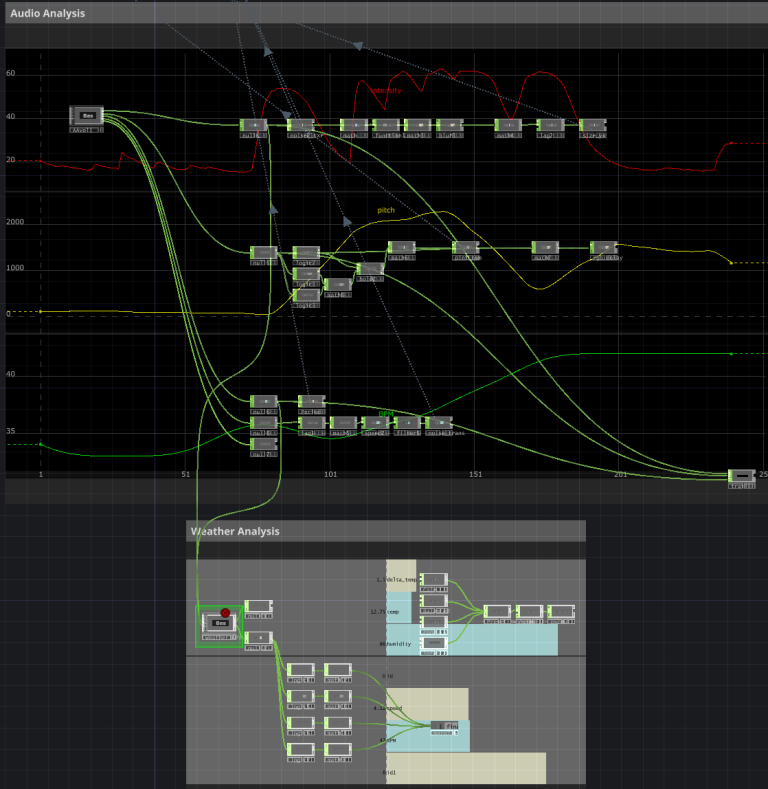

Audio Analysis (via Nodes)

I kept the analysis of intensity, pitch, and rhythm, but it now relies solely on node-based analysis rather than Python. Instead of inferring mood from the audio, these features drive immediate responses that reflect the current motion of the music. This streamlined method lets the system capture key audio dynamics without intricate code, which improves its efficiency and responsiveness to live audio.
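The project performs this analysis with TouchDesigner nodes, and the network itself isn't shown here. The Python sketch below is a rough illustration of what such nodes compute. It derives all three features from a block of mono samples:

- RMS amplitude for intensity.
- A zero-crossing estimate for pitch, which is only trustworthy for simple, near-periodic tones.
- An energy-jump test for rhythmic onsets.

The function names, the 1.5x onset threshold, and the 43-block history are assumptions for illustration, not values from the project.

```python
import math
from collections import deque


def rms(block):
    """Intensity: root-mean-square amplitude of one block of samples."""
    return math.sqrt(sum(s * s for s in block) / len(block))


def zero_crossing_pitch(block, sample_rate):
    """Crude pitch estimate in Hz from sign changes.

    A periodic signal crosses zero about twice per cycle, so
    frequency ~= crossings / (2 * block duration). Reliable only for simple tones.
    """
    crossings = sum(1 for a, b in zip(block, block[1:]) if (a < 0) != (b < 0))
    duration = len(block) / sample_rate
    return crossings / (2 * duration)


class OnsetDetector:
    """Rhythm: flags a block whose energy jumps above the recent average."""

    def __init__(self, history=43, threshold=1.5):
        # Hypothetical window: 43 blocks of 1024 samples is about 1 s at 44.1 kHz.
        self.energies = deque(maxlen=history)
        self.threshold = threshold

    def update(self, block):
        energy = sum(s * s for s in block) / len(block)
        # No history yet means no baseline, so the first block is never an onset.
        is_onset = bool(self.energies) and energy > self.threshold * (
            sum(self.energies) / len(self.energies)
        )
        self.energies.append(energy)
        return is_onset


if __name__ == "__main__":
    sr = 44100
    tone = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(4410)]  # 0.1 s at 440 Hz
    print(round(rms(tone), 3))                   # 0.354
    print(round(zero_crossing_pitch(tone, sr)))  # 435 (true pitch is 440)
```

The 440 Hz test tone shows both what the features capture and how coarse the zero-crossing method is. Node-based analysis in TouchDesigner would normally use more robust spectral methods.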

Weather Data (via API)

For mood, I focused exclusively on the essential weather elements sourced from the API: temperature, humidity, and conditions. I left out any direct mood classification derived from the audio analysis. I used the weather conditions and basic Python classification code to activate visual effect presets and styles. Each preset reflects one of four moods: calm, balanced, energetic, and intense. This configuration lets the visuals evolve with hourly weather changes, delivering a new atmosphere with every refresh.
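The classification code itself isn't reproduced in the post, so here is a minimal sketch of the idea. It assumes the API reports:

- An OpenWeatherMap-style condition label such as "Clear", "Clouds", or "Rain".
- Temperature in °C.
- Relative humidity in percent.

The condition scores and the temperature and humidity thresholds are hypothetical placeholders. They only show how three weather inputs can be folded into the four moods.

```python
from dataclasses import dataclass

# Hypothetical "agitation" score per condition label (OpenWeatherMap-style names assumed).
CONDITION_SCORES = {
    "clear": 0,
    "mist": 0,
    "clouds": 1,
    "drizzle": 1,
    "rain": 2,
    "snow": 2,
    "thunderstorm": 3,
}

# Ordered from least to most energetic; the final score indexes into this list.
MOODS = ["calm", "balanced", "energetic", "intense"]


@dataclass
class Weather:
    temperature_c: float
    humidity_pct: float
    condition: str


def classify_mood(weather: Weather) -> str:
    """Fold condition, temperature and humidity into one of four moods."""
    score = CONDITION_SCORES.get(weather.condition.lower(), 1)  # unknown label -> middle
    if weather.temperature_c >= 28 or weather.temperature_c <= 0:
        score += 1  # hypothetical: temperature extremes raise the energy level
    if weather.humidity_pct >= 85:
        score += 1  # hypothetical: very humid air adds tension
    return MOODS[min(score, len(MOODS) - 1)]


if __name__ == "__main__":
    print(classify_mood(Weather(18.0, 50.0, "Clear")))         # calm
    print(classify_mood(Weather(10.0, 90.0, "Thunderstorm")))  # intense
```

A score-and-clamp scheme keeps the four moods ordered from calm to intense. Tuning a single threshold then shifts the balance without rewriting the rules.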

Creating the System

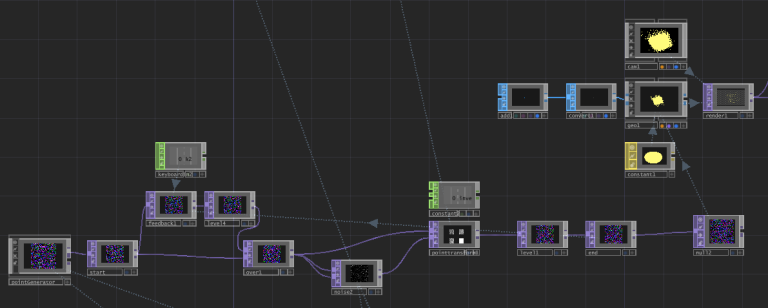

At the heart of this project is a particle system within TouchDesigner, designed with feedback loops and noise to create a textured, organic visual foundation. The particle system serves as the “canvas” that can shift and change based on the real-time data inputs.

Feedback and Noise

I created a point cloud using "Point Generator", then passed it through a feedback loop and point transformations blended with noise. This produced a coherently moving, synchronized point cloud with many opportunities for customization. Feedback and noise add complexity to the system, but they also bring a sense of depth and continuity.
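In TouchDesigner the feedback loop runs on the GPU over texture or point data, which plain Python doesn't reproduce. The conceptual sketch below only illustrates the principle. Each frame blends the previous frame's points with a noise-displaced copy of the base points. As a result, motion accumulates smoothly and stays coherent across the cloud.

Three parts of the sketch are assumptions:

- The sine-based stand-in for noise. A real setup would typically use Perlin- or simplex-style noise.
- The `feedback` blend factor.
- The grid of base points.

```python
import math


def noise(x, y, t, period=1.0):
    """Smooth, deterministic stand-in for a noise field, in [-1, 1].

    A sum of two sines, not Perlin/simplex noise; used only to avoid dependencies.
    """
    f = 2 * math.pi / period
    return 0.5 * (math.sin(f * x + 1.3 * t) + math.sin(1.7 * f * y - 0.8 * t))


def feedback_step(points, base, t, feedback=0.9, amplitude=0.2, period=1.0):
    """Advance one frame: blend last frame's points with noise-displaced base points."""
    next_points = []
    for (px, py), (bx, by) in zip(points, base):
        # Target position: the base point pushed around by the noise field.
        tx = bx + amplitude * noise(bx, by, t, period)
        ty = by + amplitude * noise(by, bx, t + 10.0, period)
        # Feedback: keep most of the previous frame, move a little toward the target.
        next_points.append((feedback * px + (1 - feedback) * tx,
                            feedback * py + (1 - feedback) * ty))
    return next_points


if __name__ == "__main__":
    base = [(x * 0.25, y * 0.25) for x in range(8) for y in range(8)]  # 8x8 grid
    points = list(base)
    for frame in range(120):
        points = feedback_step(points, base, t=frame / 30)  # assumed 30 fps timeline
    print(points[:3])
```

A `feedback` value close to 1 gives long, smeared trails. Lower values make the cloud follow the noise field more directly.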

Post-Processing

Rendering and post-processing the point cloud produced a more aesthetically pleasing shape. The sole aim of this stage is to give the system a smoother, more watchable look in which the effects of the data manipulations are more noticeable.

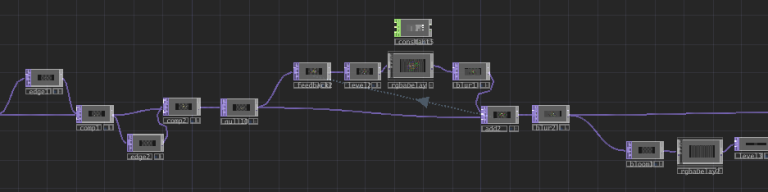

Resolume

The Hue Rotate, Radial Cloner, Blur, Brightness, and RGB shift effects are applied to the incoming visual. Changing the presets of these effects can reshape the visual so completely that it almost seems like a different system.

Implementation of Data Analysis

TouchDesigner

The node-based audio analysis drives instant changes to the parameters listed below, based on audio features such as rhythm, pitch, and intensity. TouchDesigner also sends the weather-mood preset data to Resolume via OSC (Open Sound Control). This keeps the visual presets synchronized with the weather ambience and blends sound, weather, and visuals smoothly.

- noise exponent
- blur
- point cloud size
- number of points
- noise period
- noise transformation
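How each audio feature is routed to these parameters isn't specified above. The following Python sketch assumes one plausible routing: each feature is normalized to 0..1 and linearly mapped into a parameter range. Every range, the routing table, and names such as `noise_transform` are hypothetical. They stand in for whatever the TouchDesigner network actually uses, for example CHOP channels exported to operator parameters.

```python
def lerp(lo, hi, t):
    """Linearly interpolate from lo to hi; t is clamped to [0, 1]."""
    t = max(0.0, min(1.0, t))
    return lo + (hi - lo) * t


# Hypothetical output ranges for the parameters listed above.
PARAM_RANGES = {
    "noise_exponent": (1.0, 3.0),
    "blur": (0.0, 8.0),
    "point_size": (0.5, 4.0),
    "point_count": (20_000, 200_000),
    "noise_period": (4.0, 0.5),   # reversed: higher pitch -> finer noise
    "noise_transform": (0.05, 0.6),
}

# Hypothetical routing: which normalized audio feature drives each parameter.
ROUTING = {
    "noise_exponent": "intensity",
    "blur": "intensity",
    "point_size": "rhythm",
    "point_count": "intensity",
    "noise_period": "pitch",
    "noise_transform": "rhythm",
}


def map_audio_to_params(features):
    """Map normalized audio features (0..1) to parameter values.

    Missing features default to 0.0; out-of-range inputs are clamped by lerp.
    """
    params = {}
    for name, (lo, hi) in PARAM_RANGES.items():
        value = lerp(lo, hi, features.get(ROUTING[name], 0.0))
        # Point counts must be whole numbers; everything else stays a float.
        params[name] = round(value) if name == "point_count" else value
    return params


if __name__ == "__main__":
    print(map_audio_to_params({"intensity": 0.8, "pitch": 0.3, "rhythm": 1.0}))
```

In practice the raw features jitter from block to block, so it is common to smooth them before they reach the parameters. In TouchDesigner, a Lag or Filter CHOP would typically handle that smoothing.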

Resolume

I used the weather data from the API to define visual presets for the effects already set up in Resolume. A preset is chosen according to conditions such as temperature, cloudiness, or wind. Each preset gives the particle system a distinct visual aesthetic matched to the day's overall mood. Weather-driven presets are activated every hour, so the visuals follow actual weather shifts and the system resonates with the natural changes occurring throughout the day.

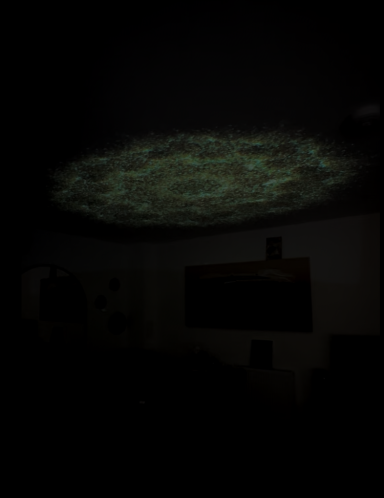

Results: Adaptive and Evolving Visuals

The final system adapts smoothly to auditory and environmental shifts, offering a fresh experience every hour. It adjusts weather-related settings dynamically throughout the day, simulating a climate-responsive environment. Meanwhile, the audio inputs influence particle behavior, producing lively movement and immediate visual reactions.

This experiment showed the potential of a data-driven visual system that continuously adapts to ambient and acoustic cues. By simplifying the inputs and using a minimal core architecture, I designed a dynamic visual experience whose permutations, driven by real-time data, are effectively unlimited. In the future, I want to incorporate additional environmental factors and use machine learning techniques to fine-tune the visual reactions based on previous data. Overall, this work showed that, with careful data integration and analysis, a single adaptable system can create many distinct experiences.

To see the result video: tiktok.com/@kanlibastudio

©Copyright. All rights reserved.
