Introduction

This project delves into the relationship between audio elements and local weather data, aiming to explore the ambient mood of a given space. It pushed me beyond my prior experience with audio analysis, letting me explore the complexities of sound, its emotional resonance, and the ways in which external elements like weather can influence the atmosphere of a space.

I aimed to identify one of eight distinct moods that might reflect the ambience of the room, relying on the combination of sound and weather data. I sought to construct a system capable of adjusting fluidly to diverse conditions, responding to both auditory and environmental changes in real time. Yet this endeavor exposed the constraints of conventional algorithms with fixed thresholds, suggesting that more sophisticated models may be needed for precise, dynamic mood detection.

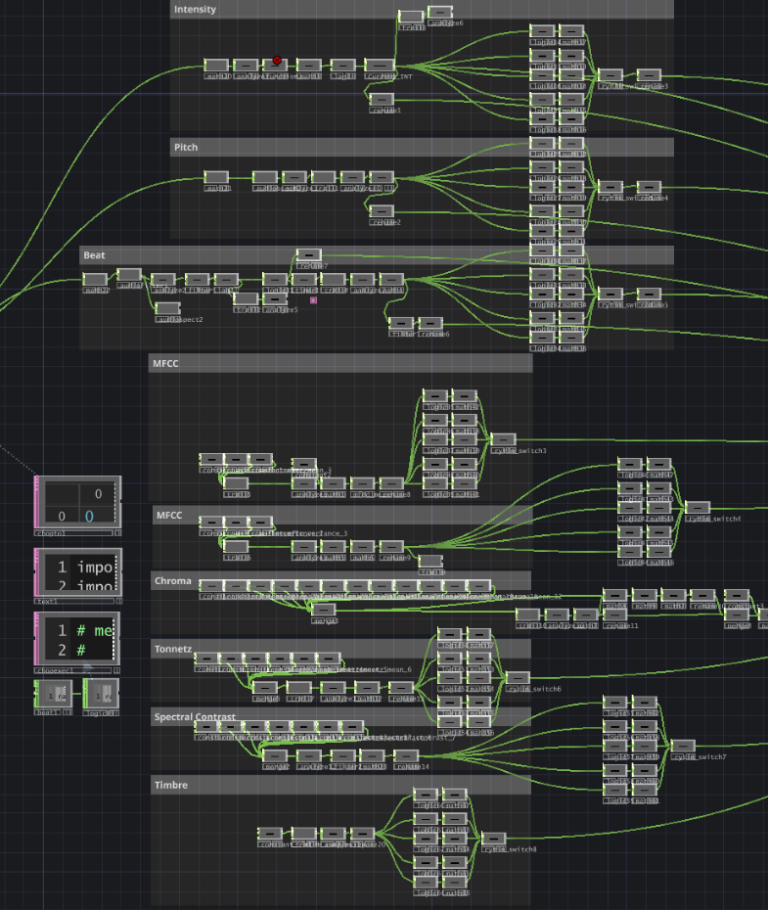

Audio Analysis

To assess an environment's mood, I examined several audio parameters that reveal the sound's emotional tone. Using Python and the Librosa library, I extracted features describing intensity, rhythm, pitch, MFCCs, Tonnetz mean, chroma, spectral contrast, and timbre. Intensity, pitch, beat detection, and BPM could be analyzed with TD nodes alone, but the remaining features required dedicated code.

The purpose was to tell whether the sound was excited, tranquil, or somewhere in between by assessing intensity and rhythm. Pitch and tonality defined the sound's emotional "color," capturing mood shifts from lightness to depth. Textural and spectral properties revealed how rich or sparse the audio seemed. By examining these aspects carefully, I aimed to build a sound profile that reflected the space's ambiance. This analysis gave me valuable information about the sound's emotional tone and laid the groundwork for connecting it to environmental data in later stages.

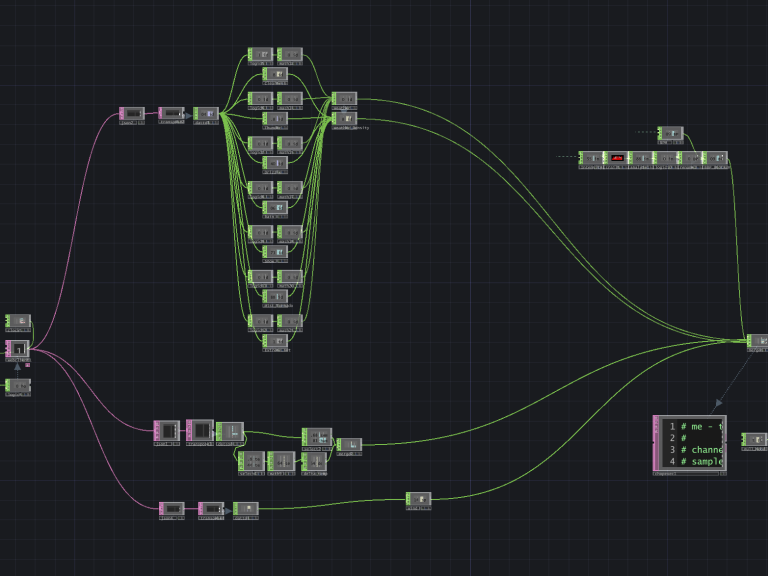

Weather

To enrich the mood analysis, I incorporated real-time weather data, adding an external dimension to the internal dynamics of the room. I collected data from a weather API covering temperature, humidity, wind speed, and wind direction (angle), along with the general weather status, which the API reports as a three-digit condition code (e.g., for sunny, cloudy, or rainy conditions). These environmental elements can quietly shape the atmosphere within a space, affecting how audio is perceived and altering the overall ambiance.
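The weather fields above can be turned into a structured reading before they feed the mood logic. The sketch below assumes a JSON payload shaped like OpenWeatherMap's current-weather response (`weather[0].id`, `main.temp`, `main.humidity`, `wind.speed`, `wind.deg`) requested in metric units. The source does not name its provider, so these field names, the `WeatherReading` class, and the condition-code grouping are assumptions.

```python
from dataclasses import dataclass

# Assumed grouping of three-digit condition codes, following the
# OpenWeatherMap convention: 2xx thunderstorm, 3xx drizzle, 5xx rain,
# 6xx snow, 7xx atmosphere (fog, haze...), 800 clear, 801-804 clouds.
CODE_GROUPS = {2: "thunderstorm", 3: "drizzle", 5: "rain", 6: "snow", 7: "atmosphere"}


@dataclass
class WeatherReading:
    condition: str
    temperature_c: float
    humidity_pct: float
    wind_speed_ms: float
    wind_deg: float


def condition_from_code(code: int) -> str:
    """Collapse a three-digit condition code into a broad weather label."""
    if code == 800:
        return "clear"
    if 801 <= code <= 804:
        return "clouds"
    return CODE_GROUPS.get(code // 100, "unknown")


def parse_weather(payload: dict) -> WeatherReading:
    """Extract the fields used for mood analysis (field names are assumptions)."""
    return WeatherReading(
        condition=condition_from_code(int(payload["weather"][0]["id"])),
        temperature_c=float(payload["main"]["temp"]),  # assumes units=metric
        humidity_pct=float(payload["main"]["humidity"]),
        wind_speed_ms=float(payload["wind"]["speed"]),
        wind_deg=float(payload["wind"].get("deg", 0.0)),  # direction may be absent
    )
```

Grouping by the leading digit keeps the mood logic independent of the many fine-grained codes (light rain, heavy rain, and so on), while the raw code could still be kept if finer distinctions turn out to matter.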

For example, specific weather patterns such as rain or overcast skies frequently inspire feelings of tranquility or reflection, whereas bright and sunny conditions tend to be linked with increased energy and a sense of optimism. By integrating audio characteristics with meteorological information, I sought to develop a system that reacts to both auditory elements and environmental conditions, offering a deeper and more flexible comprehension of emotional states.

Justifications

Each feature was extracted for a specific purpose: to analyze a particular aspect of the ambience. The justifications below explain why each one is appropriate for this project.

Intensity and Rhythm

These elements are crucial for capturing the energy of the sound: high-intensity, fast rhythms often evoke excitement or urgency, while lower intensities and slower rhythms can suggest calmness or melancholy.
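As a rough illustration of how intensity and rhythm can be quantified, the NumPy sketch below computes frame-wise RMS energy, averages it into a dB loudness figure, and estimates tempo from the autocorrelation of an energy-based onset envelope. It is a simplified stand-in for what `librosa.feature.rms` and `librosa.beat.beat_track` (or the TD beat nodes) provide; the frame sizes and the 60 to 180 BPM search range are assumptions.

```python
import numpy as np


def frame_rms(y, frame_length=256, hop_length=128):
    """Root-mean-square energy of each analysis frame."""
    n_frames = 1 + (len(y) - frame_length) // hop_length
    starts = hop_length * np.arange(n_frames)
    frames = y[starts[:, None] + np.arange(frame_length)[None, :]]
    return np.sqrt(np.mean(frames ** 2, axis=1))


def intensity_db(y, frame_length=256, hop_length=128):
    """Average loudness in dB relative to full scale (amplitude 1.0)."""
    rms = frame_rms(y, frame_length, hop_length)
    return float(20.0 * np.log10(np.mean(rms) + 1e-12))


def estimate_tempo(y, sr, frame_length=256, hop_length=128, bpm_range=(60.0, 180.0)):
    """Tempo (BPM) from the autocorrelation of an energy-based onset envelope."""
    rms = frame_rms(y, frame_length, hop_length)
    onset_env = np.maximum(0.0, np.diff(rms))  # keep only rises in energy
    ac = np.correlate(onset_env, onset_env, mode="full")[len(onset_env) - 1:]
    frames_per_sec = sr / hop_length
    # Only search lags that correspond to tempos inside bpm_range.
    min_lag = int(np.ceil(frames_per_sec * 60.0 / bpm_range[1]))
    max_lag = int(np.floor(frames_per_sec * 60.0 / bpm_range[0]))
    lag = min_lag + int(np.argmax(ac[min_lag:max_lag + 1]))
    return 60.0 * frames_per_sec / lag
```

Restricting the lag search to a plausible BPM range keeps the estimate from locking onto half- or double-tempo peaks, at the cost of missing tempos outside that range.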

Pitch

The pitch helps to define the emotional “color” of the sound. Higher pitches can feel uplifting or bright, while lower pitches add depth and weight, creating a sense of gravity. Pitch analysis is essential for assessing how light or heavy a sound feels in the context of mood.
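A minimal way to estimate pitch is to find the lag at which a frame best matches a shifted copy of itself. The sketch below assumes a single, roughly periodic source per frame; librosa offers more robust estimators (`librosa.yin`, `librosa.pyin`), and the `fmin`/`fmax` bounds here are illustrative.

```python
import numpy as np


def estimate_pitch(frame, sr, fmin=50.0, fmax=1000.0):
    """Fundamental frequency (Hz) of one frame via autocorrelation."""
    frame = frame - np.mean(frame)  # remove DC so it does not dominate
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:]
    # Lags (in samples) that correspond to the allowed pitch range.
    min_lag = int(sr / fmax)
    max_lag = min(int(sr / fmin), len(frame) - 1)
    lag = min_lag + int(np.argmax(ac[min_lag:max_lag + 1]))
    return sr / lag
```

Because the result is quantized to whole-sample lags, precision drops for high pitches; for a coarse "light versus heavy" judgement that error is usually tolerable.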

MFCC

MFCCs capture the timbral quality of sound, providing insight into the emotional texture. These coefficients are valuable for distinguishing between smooth, soft sounds versus harsh, sharp ones, both of which contribute significantly to mood perception.
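To show what an MFCC vector contains, the sketch below follows the standard recipe (windowed power spectrum, triangular mel filterbank, log, DCT-II) in plain NumPy. In the project itself `librosa.feature.mfcc` would do this; the 26 mel bands, 13 coefficients, and the HTK-style mel formula are assumptions, and librosa's defaults differ in detail (such as its default mel scale and DCT normalization).

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + np.asarray(f) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m) / 2595.0) - 1.0)


def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters spaced evenly on the mel scale."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):  # rising slope
            fb[m - 1, k] = (k - left) / (center - left)
        for k in range(center, right):  # falling slope
            fb[m - 1, k] = (right - k) / (right - center)
    return fb


def mfcc(frame, sr, n_mels=26, n_mfcc=13):
    """MFCCs of a single frame."""
    n_fft = len(frame)
    power = np.abs(np.fft.rfft(frame * np.hanning(n_fft))) ** 2 / n_fft
    log_mel = np.log(mel_filterbank(sr, n_fft, n_mels) @ power + 1e-10)
    # DCT-II decorrelates the log mel energies; keep the first n_mfcc terms.
    m = np.arange(n_mels)
    basis = np.cos(np.pi * np.outer(np.arange(n_mfcc), m + 0.5) / n_mels)
    return basis @ log_mel
```

The first coefficient mostly tracks overall loudness, while the higher ones describe the shape of the spectral envelope, which is the part that separates smooth from harsh sounds.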

Tonnetz

By examining harmonic structure, the Tonnetz mean indicates stability and coherence in tonal progression. Harmonically stable sounds can evoke serenity, while unstable ones may create tension, making this feature important for distinguishing smooth from tense moods.
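Tonnetz features can be derived from a 12-bin chroma vector by projecting each pitch class onto three circles (fifths, minor thirds, major thirds). The sketch below mirrors the projection that `librosa.feature.tonnetz` applies, and adds a hypothetical `tonal_movement` helper that measures how far the tonal centroid travels between frames, as one possible proxy for the stability the paragraph describes.

```python
import numpy as np


def tonnetz_from_chroma(chroma):
    """6-D tonal centroid of one 12-bin chroma vector (pitch class 0 = C)."""
    chroma = np.asarray(chroma, dtype=float)
    chroma = chroma / max(chroma.sum(), 1e-12)  # L1-normalise
    # Angle per semitone (in units of pi): fifths, minor thirds, major thirds.
    scale = np.array([7 / 6, 7 / 6, 3 / 2, 3 / 2, 2 / 3, 2 / 3])
    angles = np.outer(scale, np.arange(12))
    angles[::2] -= 0.5  # cos(pi*(x - 0.5)) = sin(pi*x) on the even rows
    radii = np.array([1.0, 1.0, 1.0, 1.0, 0.5, 0.5])
    phi = radii[:, None] * np.cos(np.pi * angles)
    return phi @ chroma


def tonal_movement(chroma_frames):
    """Hypothetical stability proxy: mean distance between successive centroids.
    Lower values mean a more stable harmonic progression."""
    t = np.array([tonnetz_from_chroma(c) for c in chroma_frames])
    return float(np.mean(np.linalg.norm(np.diff(t, axis=0), axis=1)))
```

A sustained chord yields zero movement, while jumping between distant keys yields a large value; averaging the centroid itself (the Tonnetz mean) instead summarizes where the harmony sits overall.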

Chroma

Chroma provides an overview of the harmonic content by mapping pitch classes, which adds context to the emotional quality of the sound. It can differentiate between warm, bright sounds a
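A chroma vector folds spectral energy into the 12 pitch classes regardless of octave. The sketch below does this naively by rounding each FFT bin to its nearest equal-tempered pitch (A4 = 440 Hz, pitch class 0 = C); `librosa.feature.chroma_stft` uses a smoother filterbank, so treat this as an illustration rather than a drop-in replacement.

```python
import numpy as np


def chroma_vector(frame, sr, fmin=27.5):
    """12-bin chroma (C, C#, ..., B) of one frame, scaled so the max is 1."""
    n = len(frame)
    mag = np.abs(np.fft.rfft(frame * np.hanning(n)))
    freqs = np.fft.rfftfreq(n, d=1.0 / sr)
    valid = freqs >= fmin  # skip DC and sub-audio bins (log2 of 0 is undefined)
    # MIDI-style pitch number: 69 is A4 = 440 Hz, and MIDI % 12 == 0 is C.
    midi = 69 + 12 * np.log2(freqs[valid] / 440.0)
    pitch_class = np.round(midi).astype(int) % 12
    chroma = np.zeros(12)
    np.add.at(chroma, pitch_class, mag[valid] ** 2)  # accumulate energy per class
    return chroma / max(chroma.max(), 1e-12)
```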

Spectral Contrast

This feature measures variations between spectral peaks and valleys, which translates into a sense of richness or sparseness in the audio. High contrast can imply intensity and complexity, while low contrast may suggest calmness.
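Spectral contrast compares the strongest and weakest parts of each frequency band. The sketch below uses octave bands starting at 200 Hz and averages the top and bottom 2% of magnitudes in each band, loosely following the defaults of `librosa.feature.spectral_contrast`; it is a simplified version, not that function's exact algorithm.

```python
import numpy as np


def spectral_contrast(frame, sr, n_bands=6, fmin=200.0, quantile=0.02):
    """Peak-to-valley ratio (dB) in each octave band of one frame."""
    n = len(frame)
    mag = np.abs(np.fft.rfft(frame * np.hanning(n)))
    freqs = np.fft.rfftfreq(n, d=1.0 / sr)
    edges = fmin * 2.0 ** np.arange(n_bands + 1)  # [200, 400, 800, ...] Hz
    contrast = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        band = np.sort(mag[(freqs >= lo) & (freqs < hi)])
        if band.size == 0:  # band lies above Nyquist
            contrast.append(0.0)
            continue
        k = max(1, int(round(quantile * band.size)))
        peak = band[-k:].mean()   # strongest components
        valley = band[:k].mean()  # weakest components
        contrast.append(20.0 * np.log10((peak + 1e-10) / (valley + 1e-10)))
    return np.array(contrast)
```

A clear tone produces a large peak-to-valley gap in its band, while broadband noise fills the band evenly and yields a much lower value.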

Timbre

A rich, warm timbre might convey comfort, while a cold, sharp timbre might indicate tension or focus, adding another dimension to mood identification.
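Timbre has no single measure, but two common descriptors map onto the warm/cold and smooth/sharp contrast in the paragraph: spectral centroid (the spectrum's "center of mass"; higher tends to sound brighter) and spectral flatness (near 0 for tonal sounds, higher for noisy ones). The sketch assumes these two descriptors stand in for timbre; librosa exposes them as `librosa.feature.spectral_centroid` and `librosa.feature.spectral_flatness`.

```python
import numpy as np


def _spectrum(frame, sr):
    mag = np.abs(np.fft.rfft(frame * np.hanning(len(frame))))
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sr)
    return mag, freqs


def spectral_centroid(frame, sr):
    """Magnitude-weighted mean frequency (Hz); higher tends to sound brighter."""
    mag, freqs = _spectrum(frame, sr)
    return float(np.sum(freqs * mag) / max(np.sum(mag), 1e-12))


def spectral_flatness(frame, sr):
    """Geometric / arithmetic mean of the power spectrum (0 = tonal, ~1 = noise)."""
    mag, _ = _spectrum(frame, sr)
    power = mag ** 2 + 1e-12  # avoid log(0)
    return float(np.exp(np.mean(np.log(power))) / np.mean(power))
```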

Weather Condition

Weather conditions provide an external context that can influence mood. For instance, rain or clouds may evoke introspection or calm, while sunny conditions often correlate with positive, energetic moods.

Temperature

Temperature can impact perceived comfort, with moderate temperatures often aligning with a relaxed or neutral mood, while extreme temperatures may influence feelings of discomfort or agitation.

Humidity

Humidity affects how “heavy” the environment feels, with higher humidity often leading to a sense of sluggishness or introspection. Lower humidity, on the other hand, can feel light and refreshing, aiding in assessing relaxed versus weighted moods.

Wind Speed and Angle

Wind contributes to the dynamism of the environment: higher wind speeds may indicate movement and energy, while calm, low-wind conditions promote stillness. Wind direction and speed together provide additional context.
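Before temperature, humidity, and wind can be combined with audio features, they need to be put on a common scale. The sketch below maps each to a 0 to 1 score with simple clamped linear ramps; every breakpoint (21 °C ideal, 30 to 90% humidity, 1 to 12 m/s wind) is a hypothetical choice, not a value from the project. Wind direction is encoded as a sine/cosine pair so that 359° and 1° end up close together instead of far apart.

```python
import math


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def temperature_comfort(temp_c: float, ideal: float = 21.0, tolerance: float = 12.0) -> float:
    """1.0 at the (hypothetical) ideal temperature, falling to 0.0 at +/- tolerance."""
    return clamp01(1.0 - abs(temp_c - ideal) / tolerance)


def humidity_heaviness(humidity_pct: float, dry: float = 30.0, humid: float = 90.0) -> float:
    """0.0 at or below `dry` (light air), 1.0 at or above `humid` (heavy air)."""
    return clamp01((humidity_pct - dry) / (humid - dry))


def wind_dynamism(speed_ms: float, calm: float = 1.0, strong: float = 12.0) -> float:
    """0.0 for near-still air, 1.0 for strong wind."""
    return clamp01((speed_ms - calm) / (strong - calm))


def wind_direction_vector(deg: float) -> tuple:
    """Encode a compass angle as (sin, cos) so 359 deg and 1 deg are neighbours."""
    rad = math.radians(deg)
    return (math.sin(rad), math.cos(rad))
```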

Limitations and Conclusion

By combining audio and weather data, I focused on pinpointing one of eight possible moods that would capture the essence of the room's atmosphere. By mapping distinct patterns in the audio and environmental data to mood categories such as relaxed, energetic, introspective, or vibrant, I sought to develop a system that adapts to both sound and surroundings in real time. Using conventional Python algorithms with fixed threshold values, I classified these patterns to produce the mood that most accurately reflected the current conditions.
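One possible shape for the fixed-threshold classifier described above: three yes/no questions (is the audio energetic, is the timbre bright, is the weather fair?) give exactly 2³ = 8 categories. The thresholds, the choice of questions, and four of the eight labels are hypothetical; only "relaxed", "energetic", "introspective", and "vibrant" come from the text.

```python
from typing import Dict, Tuple

# Hypothetical fixed thresholds; the project's actual values are not given.
THRESHOLDS = {"intensity_db": -30.0, "tempo_bpm": 100.0, "centroid_hz": 2000.0}

# Key: (high_energy, bright, fair_weather). Four labels come from the text;
# "warm", "tense", "wistful" and "calm" are placeholders.
MOODS: Dict[Tuple[bool, bool, bool], str] = {
    (True, True, True): "vibrant",
    (True, True, False): "energetic",
    (True, False, True): "warm",
    (True, False, False): "tense",
    (False, True, True): "relaxed",
    (False, True, False): "wistful",
    (False, False, True): "calm",
    (False, False, False): "introspective",
}


def classify_mood(intensity_db: float, tempo_bpm: float,
                  centroid_hz: float, weather_condition: str) -> str:
    """Map audio and weather features to one of eight moods via fixed thresholds."""
    high_energy = (intensity_db > THRESHOLDS["intensity_db"]
                   or tempo_bpm > THRESHOLDS["tempo_bpm"])
    bright = centroid_hz > THRESHOLDS["centroid_hz"]
    fair_weather = weather_condition in {"clear", "clouds"}
    return MOODS[(high_energy, bright, fair_weather)]
```

The rigidity discussed in the next paragraph is visible here: a room that is consistently loud will always land in a high-energy mood, whatever its usual baseline.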

This method established a solid base, but it also highlighted the complex nature of mood detection, since moods are shaped by subtle changes in audio and environmental data. This observation pointed to the need for more dynamic modeling that responds to real-time changes and subtle emotional variations. Although constant threshold values and Python code running on my laptop were enough to produce an estimate, the result falls well short of a system that could use higher-performance hardware, dynamic thresholds, and AI-based classification. With this in mind, I identified new opportunities to improve the system through machine learning methods, allowing for more adaptable and responsive mood analysis.
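Short of a machine-learning model, one simple way to make a threshold dynamic is to compare each new reading with rolling statistics of recent readings, so that "loud" is judged relative to the room's own baseline. The sketch below assumes a mean-plus-k-standard-deviations rule over a fixed window; `AdaptiveThreshold`, `window`, `k`, and `warmup` are hypothetical names and values.

```python
from collections import deque
from statistics import mean, pstdev


class AdaptiveThreshold:
    """Flags a value as 'high' relative to a rolling window of recent values
    instead of a fixed constant (hypothetical helper)."""

    def __init__(self, window: int = 120, k: float = 0.5, warmup: int = 10):
        self.history = deque(maxlen=window)  # oldest values drop out automatically
        self.k = k
        self.warmup = warmup

    def is_high(self, value: float) -> bool:
        # Compare against history *before* adding the new value.
        ready = len(self.history) >= self.warmup
        high = ready and value > mean(self.history) + self.k * pstdev(self.history)
        self.history.append(value)
        return high
```

For example, after a stretch of quiet readings around -40 dB a jump to -30 dB is flagged as high, but once the room has been at -20 dB for a full window, -20 dB is treated as its new normal rather than as permanently "high".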

Overall, this study examined audio-environmental data fusion for adaptive mood analysis. I studied audio and real-time weather data to understand how sound and surroundings affect ambiance. The approach exposed challenges in both data analysis and real-time mood interpretation, and it suggested that machine learning could enable a dynamic, responsive mood-detection system. These insights point toward more sophisticated, adaptive environments that respond naturally to sound and context.

