Role

Tools

Duration

Output

Date

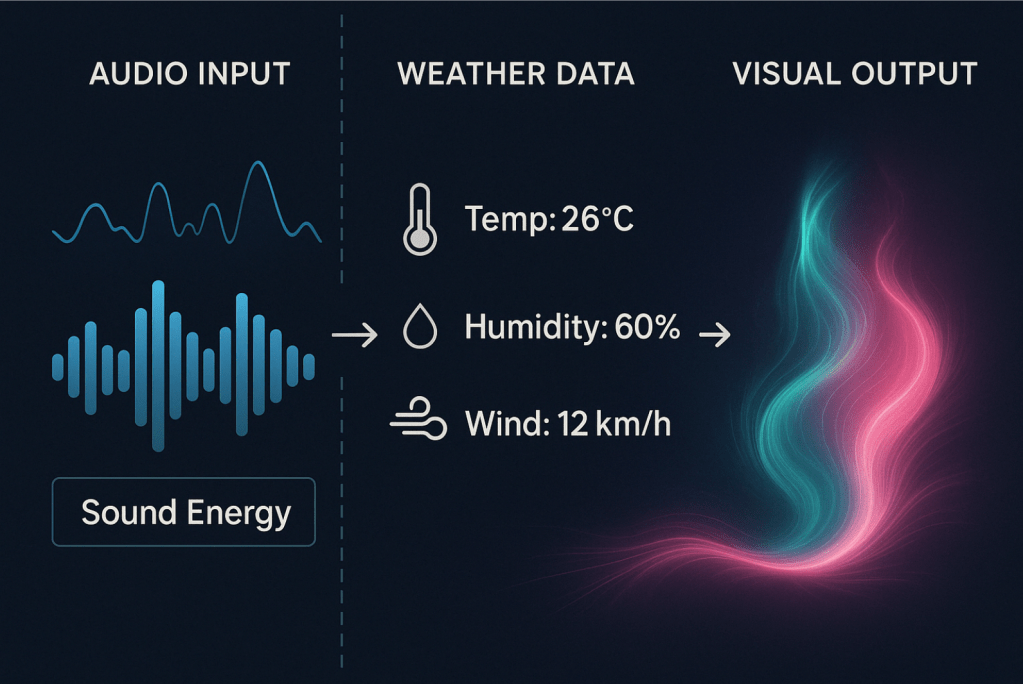

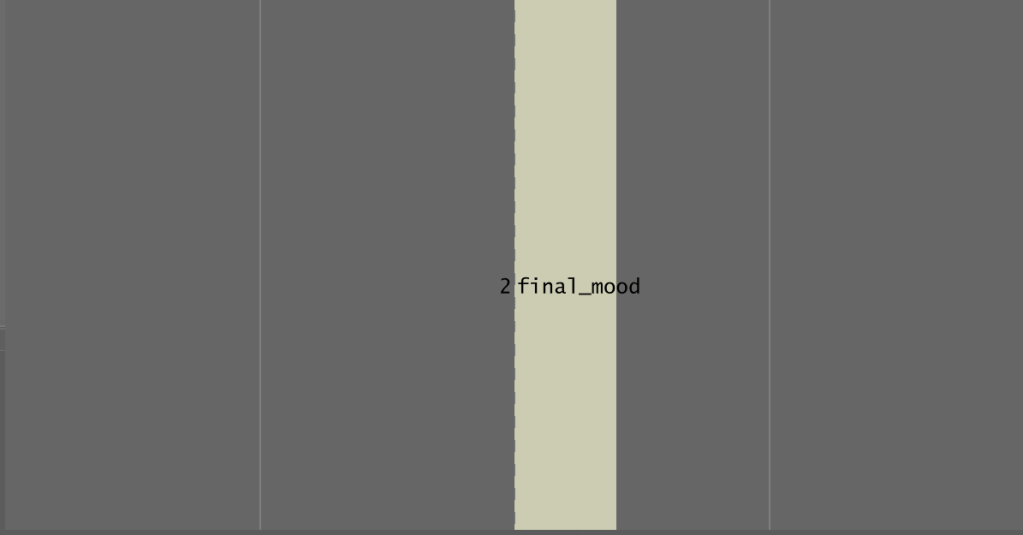

What if your surroundings could feel the temperature of the world outside—and respond with a mood? This project explores how local weather data and audio input can be combined into an ambient visualization system designed to express and mirror emotional tone through visuals. By mapping real-time weather conditions—temperature, humidity, wind—and combining them with audio features like rhythm, pitch, and timbre, I built a system that interprets mood.

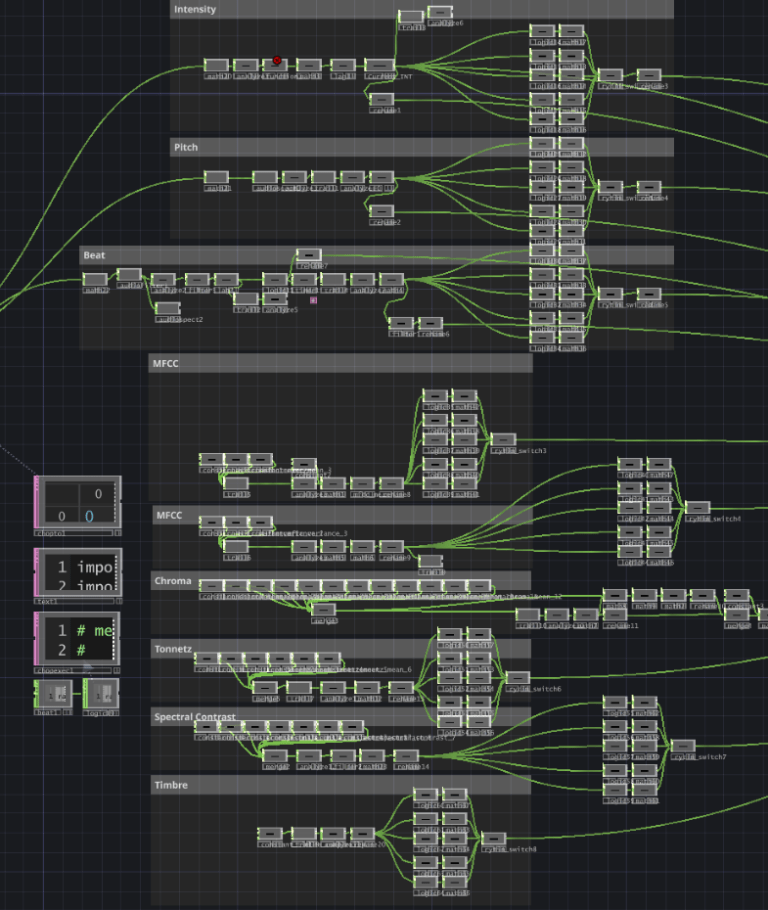

Intensity – Pitch – MFCC – Tonnetz – Chroma – Spectral Contrast – Timbre – Weather Condition – Temperature – Humidity – Wind Speed and Angle

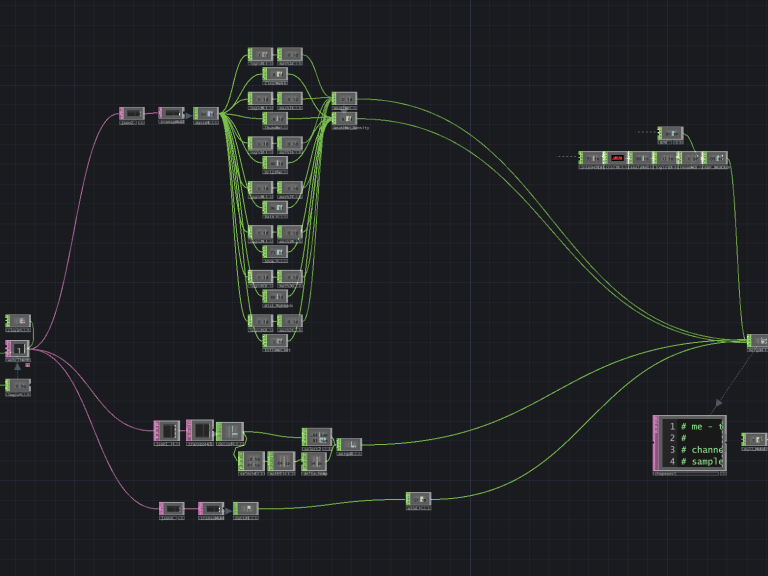

Audio In → Feature Extraction → Emotional Mapping

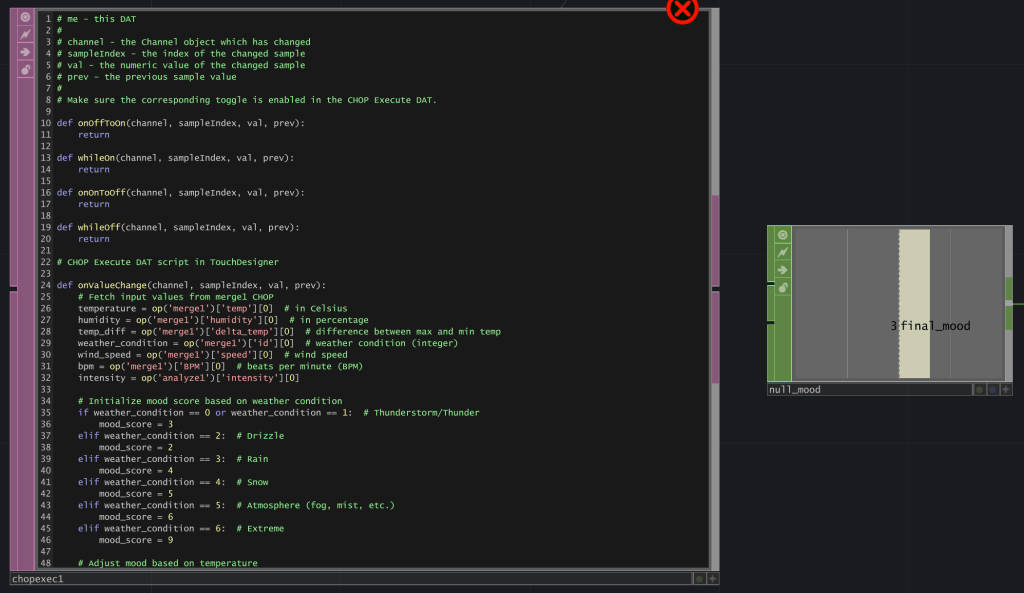

Weather API → Environmental Normalization → Mood Merge → Visual Logic

The audio analysis module extracts emotion-related features from the incoming sound, including rhythm, pitch, MFCCs, and timbre.
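To give a feel for what feature extraction means in practice, here is a minimal sketch using only the Python standard library. It is not the project's actual analysis code: it covers just two simple features (RMS intensity and a zero-crossing pitch estimate), and the function names, the 8 kHz sample rate, and the synthetic test tone are all assumptions made for illustration. Features such as MFCCs or chroma would normally come from a dedicated audio library.

```python
import math

def rms_intensity(samples):
    """Root-mean-square amplitude, a simple loudness/intensity measure."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def zero_crossing_pitch(samples, sample_rate):
    """Rough pitch estimate in Hz from the number of sign changes.

    A pure tone crosses zero twice per cycle, so the frequency is roughly
    crossings / (2 * duration). Only reliable for clean, monophonic input.
    """
    crossings = sum(
        1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0)
    )
    duration = len(samples) / sample_rate
    return crossings / (2 * duration)

# Synthetic 440 Hz tone, one second long (a stand-in for live audio input).
SAMPLE_RATE = 8000  # assumed sample rate for this sketch
tone = [
    0.5 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE)
    for n in range(SAMPLE_RATE)
]

intensity = rms_intensity(tone)
pitch_hz = zero_crossing_pitch(tone, SAMPLE_RATE)
```

For the test tone, the intensity lands near 0.35 (the RMS of a sine with amplitude 0.5) and the pitch estimate lands within a hertz of 440.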

Each feature was mapped to a mood-related signal, and together these signals form the audio-side input to the system’s emotional logic.
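One way to picture this mapping step is a small function that turns raw features into a two-value mood signal. The sketch below is a hypothetical illustration, not the mapping used in the project: the valence/arousal representation, the feature ranges, the weights, and the use of tempo as a stand-in for rhythm are all assumptions.

```python
def clamp01(x):
    """Clamp a number into the range [0, 1]."""
    return max(0.0, min(1.0, x))

def audio_mood(intensity, pitch_hz, tempo_bpm):
    """Map raw audio features to a hypothetical valence/arousal pair in [0, 1]."""
    # Assumed feature ranges, chosen for illustration only.
    loudness = clamp01(intensity / 0.5)                        # RMS 0 to 0.5
    brightness = clamp01((pitch_hz - 80.0) / (1000.0 - 80.0))  # 80 to 1000 Hz
    pace = clamp01((tempo_bpm - 60.0) / (180.0 - 60.0))        # 60 to 180 BPM

    # Assumed weights: louder and faster reads as more energetic,
    # higher-pitched and faster reads as more positive.
    arousal = 0.5 * loudness + 0.5 * pace
    valence = 0.7 * brightness + 0.3 * pace
    return {"valence": valence, "arousal": arousal}

calm = audio_mood(intensity=0.05, pitch_hz=120.0, tempo_bpm=60.0)
energetic = audio_mood(intensity=0.45, pitch_hz=700.0, tempo_bpm=160.0)
```

With these assumed weights, the quiet, slow, low-pitched input scores low on both axes, while the loud, fast, brighter input scores high on both.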

Using a live weather API, temperature, humidity, and wind data were normalized and fed into the system.
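The normalization step can be sketched as a clamped min-max scaling, so that every weather reading ends up on the same 0 to 1 scale before it meets the audio signal. This is a sketch under stated assumptions: the field names, the physical ranges, and the sample reading are hypothetical, and the call to the weather API itself is left out.

```python
def normalize(value, low, high):
    """Min-max scale a reading into [0, 1], clamping values outside the range."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return max(0.0, min(1.0, (value - low) / (high - low)))

# Assumed physical ranges for each reading (illustrative, not from the project).
RANGES = {
    "temperature_c": (-10.0, 40.0),
    "humidity_pct": (0.0, 100.0),
    "wind_speed_ms": (0.0, 25.0),
}

def normalize_weather(reading):
    """Normalize every known field of a weather reading."""
    return {key: normalize(reading[key], *RANGES[key]) for key in RANGES}

# Hypothetical reading standing in for a live API response.
sample_reading = {"temperature_c": 15.0, "humidity_pct": 60.0, "wind_speed_ms": 5.0}
weather = normalize_weather(sample_reading)
```

Clamping matters here because live readings can fall outside whatever range was assumed, and an unclamped value would push the visuals past their intended limits.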

This project deepened my understanding of how to merge perceptual data from two seemingly unrelated sources: weather and sound. By building a model that interprets emotional resonance from both, I learned how ambient systems can respond like living organisms—adapting tone, rhythm, and presence based on their environment.

Key Outcomes:

- Developed dual-path mood model for sound + weather

- Extracted MFCCs, pitch, and timbre to classify audio emotion

- Integrated real-time weather data via Python APIs

- Tuned system to produce emotional ambient feedback
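The dual-path idea in the outcomes above can be sketched as two mood estimates, one per source, blended into a single signal that drives the visuals. Everything specific here is an assumption for illustration: the weather-to-mood weights, the 60/40 blend, and the hue and motion-speed outputs are hypothetical and do not describe the actual TouchDesigner network.

```python
def weather_mood(temperature, humidity, wind):
    """Turn normalized weather values (each in [0, 1]) into a mood estimate."""
    # Assumed mapping: warm and dry reads as positive, windy reads as energetic.
    valence = 0.6 * temperature + 0.4 * (1.0 - humidity)
    arousal = wind
    return {"valence": valence, "arousal": arousal}

def merge_moods(audio, weather, audio_weight=0.6):
    """Weighted blend of the audio-side and weather-side mood estimates."""
    w = audio_weight
    return {
        key: w * audio[key] + (1.0 - w) * weather[key]
        for key in ("valence", "arousal")
    }

def visual_params(mood):
    """Map the merged mood to hypothetical visual controls."""
    hue_deg = 240.0 - 210.0 * mood["valence"]    # blue (240) toward orange (30)
    motion_speed = 0.2 + 1.8 * mood["arousal"]   # slow drift up to fast motion
    return {"hue_deg": hue_deg, "motion_speed": motion_speed}

audio_side = {"valence": 0.8, "arousal": 0.5}    # example audio mood
weather_side = weather_mood(temperature=0.5, humidity=0.6, wind=0.2)
merged = merge_moods(audio_side, weather_side)
visuals = visual_params(merged)
```

Keeping the two paths separate until the merge makes it easy to rebalance them: lowering `audio_weight` lets the weather dominate on a quiet day without touching either mapping.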

This system serves as a prototype for emotionally aware environments—where external weather conditions and internal sonic energy come together to shape ambient visuals in real time. TouchDesigner allowed for deep integration of sound analysis and API-based data parsing, making the final output both technically rich and aesthetically adaptable.