Role

Tools

Duration

Output

Date

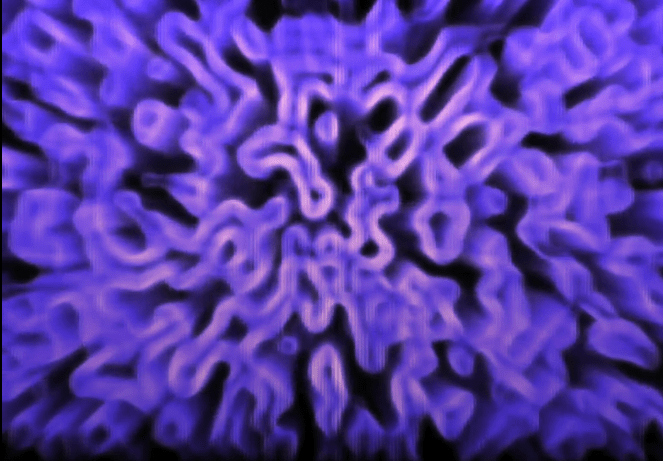

This project explores how tactile interfaces—specifically MIDI controllers—can transform real-time generative systems into responsive visual instruments. The goal was to develop a macro-mapped control logic that allows a single physical interaction to modify multiple parameters simultaneously, creating complex visual shifts with minimal effort. This opens the door to VJ performances, interactive installations, and live audiovisual sets where expression is fluid, fast, and intuitive.

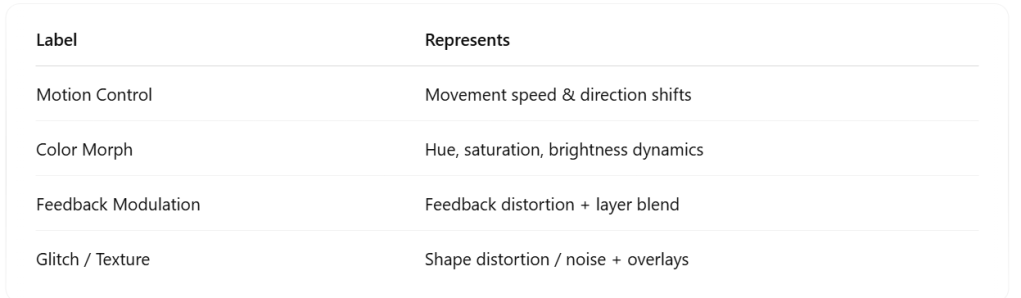

Each fader or knob was macro-mapped to influence multiple visual dimensions—such as shape, movement, feedback, color depth, and speed—all at once.

MIDI Knobs/Faders → Multi-Parameter Mapping → Visual Engine → Projection
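As a rough illustration of the macro-mapping stage in this chain, the Python sketch below fans one fader value out to several parameters at once. The parameter names, ranges, and the `exponent` response curve are assumptions made for the example, not the values used in the project.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Target:
    """One visual parameter driven by a macro (names and ranges are hypothetical)."""
    name: str
    lo: float              # output when the fader is at 0
    hi: float              # output when the fader is at 127
    exponent: float = 1.0  # 1.0 = linear response, >1.0 = slower start


def apply_macro(cc_value: int, targets: List[Target]) -> Dict[str, float]:
    """Map one 7-bit MIDI value (0-127) onto every target in the bundle."""
    if not 0 <= cc_value <= 127:
        raise ValueError("MIDI CC values must be in the range 0-127")
    t = cc_value / 127.0  # normalize to 0.0-1.0
    return {
        tg.name: tg.lo + (tg.hi - tg.lo) * (t ** tg.exponent)
        for tg in targets
    }


# Assumed bundle for a single fader; lo > hi inverts the response.
FADER_1 = [
    Target("shape_distortion", 0.0, 1.0),
    Target("feedback", 0.2, 0.95, exponent=2.0),
    Target("speed", 0.5, 4.0),
    Target("color_depth", 8.0, 2.0),
]

if __name__ == "__main__":
    for value in (0, 64, 127):
        print(value, apply_macro(value, FADER_1))
```

Keeping each target's range and curve in data rather than in code means a bundle can be retuned between shows without touching the mapping logic.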

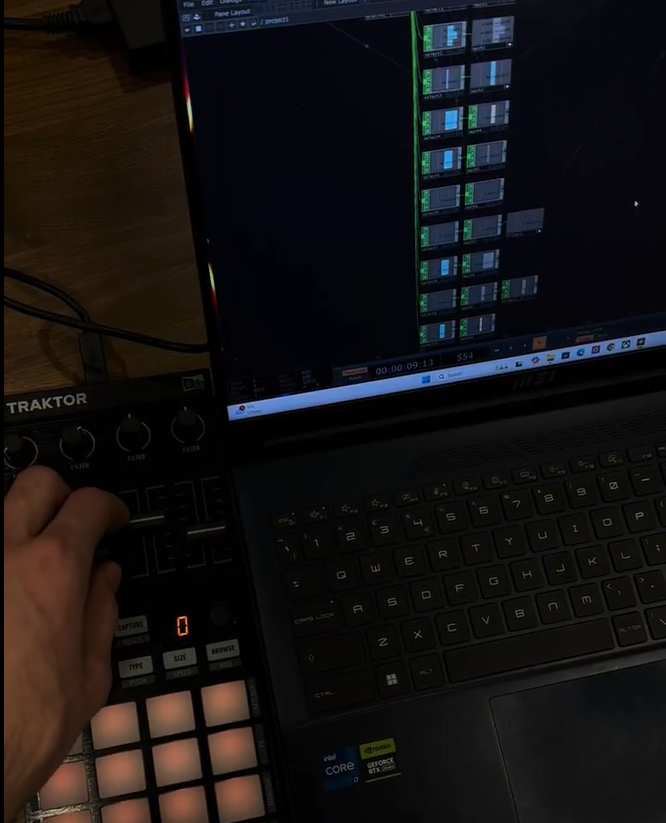

TouchDesigner was used to map physical MIDI input to multi-dimensional generative controls.

Each interaction triggered a bundle of parameter changes, so a single gesture could reshape several aspects of the image at once. That makes the approach well suited to high-tempo or solo VJ setups.

This created a system where motion, speed, shape distortion, color ramping, and glitch behaviors could be manipulated in real time, all from a tactile interface.
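The input side of that mapping can be sketched in plain Python. In TouchDesigner the MIDI In CHOP handles message decoding, so the parser here is only meant to show what happens underneath: a three-byte Control Change message is decoded, its controller number selects a bundle, and every parameter in that bundle is updated. The controller numbers and parameter ranges are hypothetical.

```python
from typing import Dict, Optional, Tuple


def parse_control_change(msg: bytes) -> Optional[Tuple[int, int, int]]:
    """Return (channel, controller, value) for a Control Change message, else None."""
    # Control Change status bytes are 0xB0-0xBF; the low nibble is the channel.
    if len(msg) != 3 or (msg[0] & 0xF0) != 0xB0:
        return None
    status, controller, value = msg
    if controller > 127 or value > 127:
        return None  # data bytes must be 7-bit
    return status & 0x0F, controller, value


# Hypothetical bundles: controller number -> {parameter name: (lo, hi)}
BUNDLES = {
    1: {"motion": (0.0, 1.0), "speed": (0.5, 4.0)},
    2: {"color_ramp": (0.0, 1.0), "glitch": (0.0, 0.6)},
}


def handle_message(msg: bytes, state: Dict[str, float]) -> Dict[str, float]:
    """Apply one incoming MIDI message to the current parameter state."""
    parsed = parse_control_change(msg)
    if parsed is None:
        return state  # ignore notes, clock, and other non-CC traffic
    _, controller, value = parsed
    t = value / 127.0
    for name, (lo, hi) in BUNDLES.get(controller, {}).items():
        state[name] = lo + (hi - lo) * t
    return state


if __name__ == "__main__":
    params: Dict[str, float] = {}
    handle_message(bytes([0xB0, 1, 127]), params)  # knob 1 fully up
    handle_message(bytes([0xB0, 2, 0]), params)    # knob 2 fully down
    print(params)
```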

Resolume was introduced to experiment with routing MIDI-mapped values into layered post-process effects.

This extended the system so that the same macros reached both TouchDesigner’s procedural engine and Resolume’s VFX stack.
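One way to picture this routing is a fan-out step that re-encodes a single macro value as MIDI Control Change messages, one per effect parameter on the receiving side. The sketch below only builds the message bytes. The channel, the controller numbers, and the idea that each effect is MIDI-mapped to its own CC are assumptions, and sending the bytes to an actual MIDI port is left out.

```python
from typing import List, Tuple

# Hypothetical routes: (channel, controller, lo, hi) in normalized 0.0-1.0 units.
# lo > hi inverts the response for that effect.
Route = Tuple[int, int, float, float]

EFFECT_ROUTES: List[Route] = [
    (0, 20, 0.0, 1.0),  # e.g. an effect's intensity rises with the macro
    (0, 21, 1.0, 0.0),  # e.g. a layer's opacity falls as the macro rises
]


def encode_control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a 3-byte MIDI Control Change message."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("controller and value must be 0-127")
    return bytes([0xB0 | channel, controller, value])


def fan_out(macro: float, routes: List[Route]) -> List[bytes]:
    """Turn one normalized macro value into one CC message per route."""
    macro = min(1.0, max(0.0, macro))  # clamp to the valid range
    messages = []
    for channel, controller, lo, hi in routes:
        scaled = lo + (hi - lo) * macro
        messages.append(encode_control_change(channel, controller, round(scaled * 127)))
    return messages


if __name__ == "__main__":
    for m in (0.0, 0.25, 1.0):
        print(m, [msg.hex() for msg in fan_out(m, EFFECT_ROUTES)])
```

Because the fan-out works on a normalized value, the same macro can drive the generative parameters and the post-process effects with different ranges on each side.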

This system was designed to support creative flow with minimal friction. By distilling complex effects into simplified hardware gestures, the project emphasized the power of smart control systems in generative visual performance.

Key Outcomes:

- Designed a macro-mapped control scheme using MIDI

- Integrated a real-time generative visual system in TouchDesigner

- Extended MIDI routing into Resolume for layered visuals

- Created a modular base for scalable VJ / interactive setups

This project presents a foundation for live, adaptive visual control that lets performers and artists do more with less, treating visual manipulation as a tactile, expressive practice. It demonstrates how generative visuals can be sculpted in real time with smart interfaces, blurring the line between coding and performing.