This project investigates how ambient visual systems can be made self-adjusting and context-aware. By combining weather API data with real-time audio analysis, I designed a generative system whose visuals respond to both the atmosphere outside and the sounds inside, shifting continuously across moods and times of day. Rather than reacting to isolated stimuli, the system was built to interpret rhythm, tone, and environmental state together as a single psychological moment, so the visuals evolve naturally as the inputs fluctuate.

Sound and weather data flow together into the generative system, continuously modifying color, speed, rhythm, and structure.

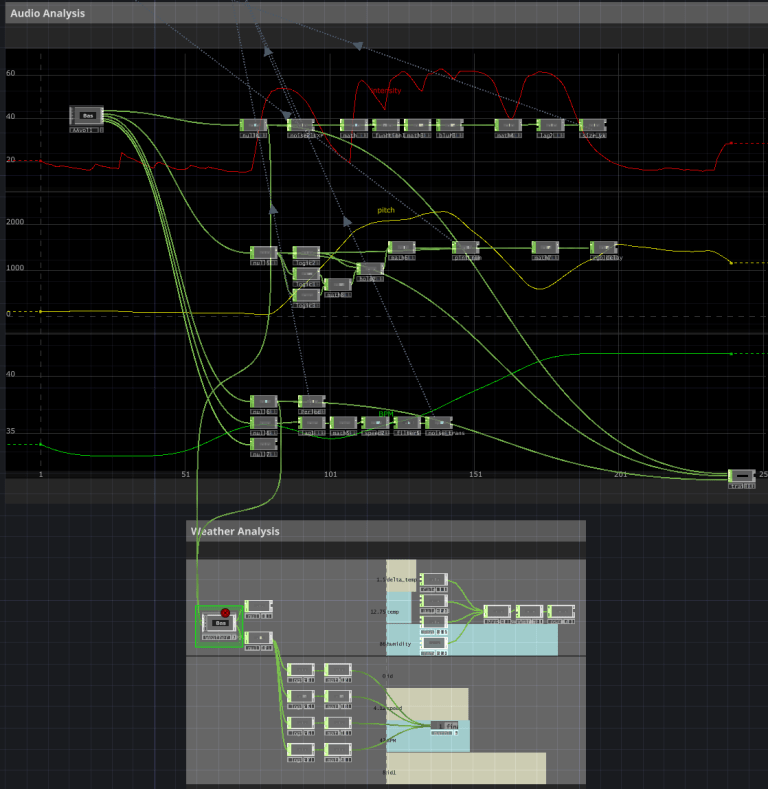

Weather API + Audio Stream → Data Filters → TD Particle Engine → Feedback + FX → Final Visual Output
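In the diagram, two input streams converge before the particle engine and the effects stage. The routing step can be sketched as follows. This sketch assumes both streams have already been filtered into 0..1 values. `ControlFrame`, `route`, and the key names are hypothetical illustrations, not TouchDesigner or Resolume APIs.

```python
from dataclasses import dataclass, field


@dataclass
class ControlFrame:
    """Control values for one frame, grouped by the stage that reads them."""
    particles: dict = field(default_factory=dict)  # consumed by the particle engine
    fx: dict = field(default_factory=dict)         # consumed by the feedback + FX stage


def route(audio: dict, weather: dict) -> ControlFrame:
    """Merge already-filtered audio and weather features into one frame.

    Both inputs are assumed to be normalized to the 0..1 range.
    """
    frame = ControlFrame()
    # Fast-changing audio features drive per-particle behavior.
    frame.particles["jitter"] = audio["level"]
    frame.particles["speed"] = audio["loudness"]
    # Slow-changing weather features drive global effects.
    frame.fx.update(weather)
    return frame


frame = route({"level": 0.3, "loudness": 0.6}, {"humidity": 0.5, "cloud_cover": 0.2})
print(frame.particles)  # {'jitter': 0.3, 'speed': 0.6}
print(frame.fx)         # {'humidity': 0.5, 'cloud_cover': 0.2}
```

Each domain feeds only the stage whose timescale it matches. This separation keeps fast audio fluctuations from disturbing the slower global look.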

Audio amplitude and rhythm drove micro-particle behavior, while overall loudness shaped intensity and speed. The system passed these features through multiple logic branches to balance momentary expression against gradual shifts.
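The following sketch shows one way to split audio into a momentary branch and a gradual branch: two envelope followers with different attack and release rates run on the same per-block RMS level. This is an assumed technique, not necessarily how the original patch worked. The rates, the `onset_margin` threshold, and the output names are hypothetical. The "onset" flag is only a crude stand-in for rhythm detection.

```python
import math


def rms(block):
    """Root-mean-square level of one block of audio samples."""
    if not block:
        return 0.0
    return math.sqrt(sum(s * s for s in block) / len(block))


class EnvelopeFollower:
    """One-pole smoother with separate attack and release rates (each in (0, 1])."""

    def __init__(self, attack, release):
        self.attack = attack
        self.release = release
        self.value = 0.0

    def update(self, x):
        # Rise at the attack rate, fall at the release rate.
        rate = self.attack if x > self.value else self.release
        self.value += rate * (x - self.value)
        return self.value


def audio_controls(block, fast, slow, onset_margin=0.1):
    """Split one audio block into a momentary branch and a gradual branch."""
    level = rms(block)
    momentary = fast.update(level)  # follows transients closely
    gradual = slow.update(level)    # follows overall loudness
    return {
        "particle_jitter": momentary,
        "intensity": gradual,
        "speed": 0.2 + 0.8 * gradual,
        # Crude rhythm cue: a transient well above the running loudness.
        "onset": momentary - gradual > onset_margin,
    }


fast = EnvelopeFollower(attack=0.6, release=0.3)
slow = EnvelopeFollower(attack=0.02, release=0.01)
controls = audio_controls([0.5, -0.5, 0.5, -0.5], fast, slow)
```

Because the slow follower barely moves on a single loud block, the gap between the two branches acts as a simple measure of "momentary expression" relative to the longer-term mood.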

Weather data was pulled in through Python API calls, and hourly readings of temperature, humidity, and cloud cover were scaled into control values for the visuals.
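Hourly readings arrive in physical units, so one approach is to normalize each reading to 0..1. The sketch below does this and then interpolates between the current and the next hourly reading, so that values drift during the hour instead of jumping on the hour. It assumes a next-hour value is available, for example from a forecast. The temperature range, `HourlyReading`, and `weather_controls` are hypothetical, and no specific weather service is implied.

```python
from dataclasses import dataclass

# Hypothetical normalization ranges; real values would be tuned per location.
TEMP_RANGE_C = (-10.0, 35.0)
PERCENT_RANGE = (0.0, 100.0)


@dataclass
class HourlyReading:
    temperature_c: float
    humidity_pct: float
    cloud_cover_pct: float


def normalize(value, lo, hi):
    """Map value from [lo, hi] to [0, 1], clamping out-of-range input."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return max(0.0, min(1.0, (value - lo) / (hi - lo)))


def to_unit(reading):
    """Convert one reading into normalized control values."""
    return {
        "temperature": normalize(reading.temperature_c, *TEMP_RANGE_C),
        "humidity": normalize(reading.humidity_pct, *PERCENT_RANGE),
        "cloud_cover": normalize(reading.cloud_cover_pct, *PERCENT_RANGE),
    }


def weather_controls(prev_hour, next_hour, minutes_past_hour):
    """Linearly interpolate between two hourly readings so values drift smoothly."""
    t = max(0.0, min(1.0, minutes_past_hour / 60.0))
    a, b = to_unit(prev_hour), to_unit(next_hour)
    return {key: a[key] + (b[key] - a[key]) * t for key in a}


now = weather_controls(
    HourlyReading(temperature_c=12.5, humidity_pct=40, cloud_cover_pct=0),
    HourlyReading(temperature_c=12.5, humidity_pct=60, cloud_cover_pct=100),
    minutes_past_hour=30,
)
```

Halfway through the hour, each value sits midway between the two readings. In this example, every control lands at 0.5.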

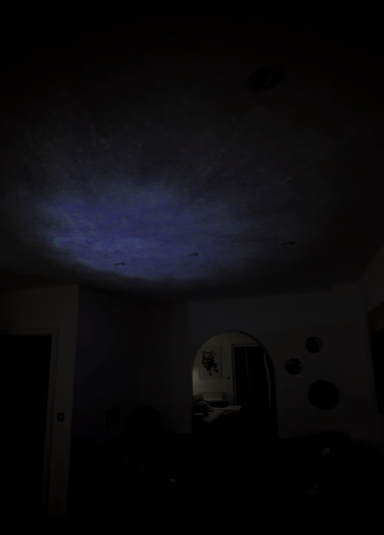

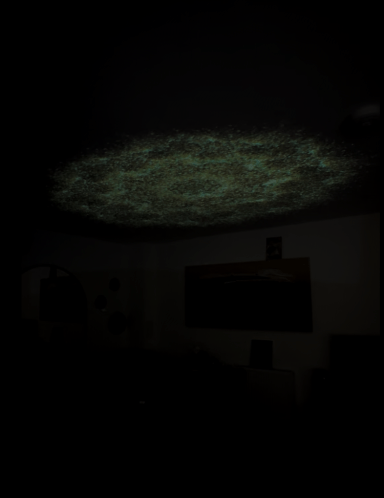

In Resolume, these values drove global effects such as contrast, radial blur, and color wash, producing ambient visual states whose mood changed throughout the day without direct user control.
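To keep mood changes gradual, one option is to map the normalized weather values to effect targets and then slew each effect parameter toward its target by a small step per tick. The sketch below shows this approach. The effect names follow the text, but the dictionary keys, the mapping curves, and `max_step` are hypothetical. Sending the resulting values to Resolume (for example, over OSC) is not shown.

```python
def fx_targets(weather):
    """Hypothetical mapping from normalized weather (0..1) to effect targets (0..1)."""
    return {
        # Clearer skies -> crisper contrast.
        "contrast": 0.4 + 0.6 * (1.0 - weather["cloud_cover"]),
        # Humid air -> softer, more diffused image.
        "radial_blur": 0.8 * weather["humidity"],
        # Warmer temperatures -> stronger color wash.
        "color_wash": weather["temperature"],
    }


def slew(current, target, max_step):
    """Move each parameter toward its target by at most max_step per tick."""
    result = {}
    for name, goal in target.items():
        value = current.get(name, goal)  # unseen parameters start at their target
        step = max(-max_step, min(max_step, goal - value))
        result[name] = value + step
    return result


state = {"contrast": 1.0, "radial_blur": 0.0, "color_wash": 0.5}
targets = fx_targets({"cloud_cover": 1.0, "humidity": 0.5, "temperature": 0.5})
state = slew(state, targets, max_step=0.05)
```

Rate-limiting the transitions means that even a sudden change in the hourly data plays out as a slow visual fade rather than a cut.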

This project demonstrated the potential of multi-input ambient environments. By filtering data from both external weather conditions and internal sonic rhythms, the system maintained fluid, perceptually aligned visuals that always felt present and never static.

Key Outcomes:

- Created a fully self-adjusting generative visual engine

- Integrated hourly API feeds to shift the visual environment

- Optimized live audio mapping for continuous feedback

- Combined two distinct data domains into one cohesive aesthetic logic

This project is a prototype for emotionally intelligent ambient media: systems that respond to the rhythm of life with subtlety and sophistication.

By tying local weather and internal soundscapes together, the system becomes not just responsive but alive, evolving every hour into a new visual memory. It offers a path forward for public installations, meditative spaces, and adaptive interiors where media reacts as intuitively as nature itself.